Google MediaPipe

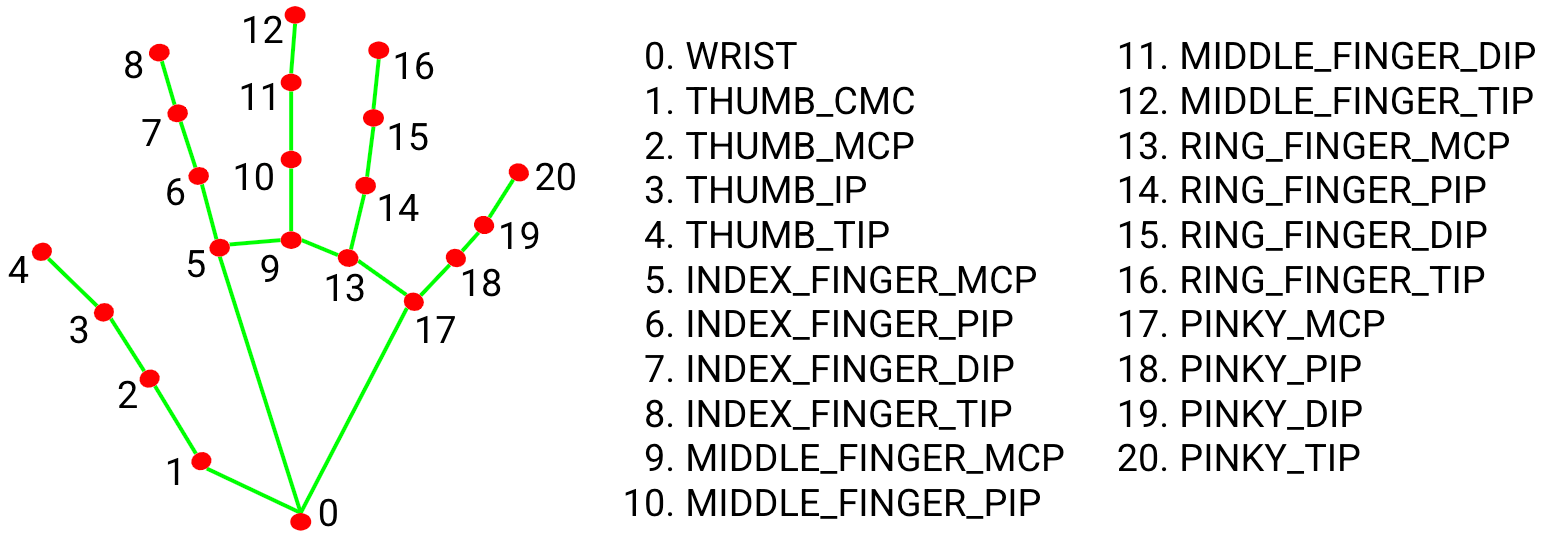

MediaPipe is a library that provides object detection and classification using pre-trained AI models that run fast and efficiently. The hand library provides X, Y, Z coordinates for the hand landmarks shown in the image above.
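MediaPipe Hands reports 21 landmarks per detected hand, numbered 0 (wrist) through 20 (pinky tip). The thumb tip is landmark 4 and the index fingertip is landmark 8. The x and y values are normalized to [0, 1] by the image width and height, and z is a depth relative to the wrist. The minimal sketch below needs only the standard library. It lists the landmark indices and converts a normalized point to pixels. The names `LANDMARK_NAMES` and `to_pixels` are hypothetical helpers for illustration and are not part of the MediaPipe API.

```python
# Landmark indices used by MediaPipe Hands (0 = wrist ... 20 = pinky tip).
# Written out by hand for illustration; in real code the enum
# mediapipe.solutions.hands.HandLandmark provides the same indices.
LANDMARK_NAMES = [
    "WRIST",
    "THUMB_CMC", "THUMB_MCP", "THUMB_IP", "THUMB_TIP",
    "INDEX_FINGER_MCP", "INDEX_FINGER_PIP", "INDEX_FINGER_DIP", "INDEX_FINGER_TIP",
    "MIDDLE_FINGER_MCP", "MIDDLE_FINGER_PIP", "MIDDLE_FINGER_DIP", "MIDDLE_FINGER_TIP",
    "RING_FINGER_MCP", "RING_FINGER_PIP", "RING_FINGER_DIP", "RING_FINGER_TIP",
    "PINKY_MCP", "PINKY_PIP", "PINKY_DIP", "PINKY_TIP",
]

THUMB_TIP = LANDMARK_NAMES.index("THUMB_TIP")                # 4
INDEX_FINGER_TIP = LANDMARK_NAMES.index("INDEX_FINGER_TIP")  # 8


def to_pixels(x, y, width, height):
    """Convert normalized (x, y) landmark coordinates to integer pixel coordinates.

    Hypothetical helper: x is normalized by image width and y by image height,
    so scaling by the frame size recovers pixel positions. z is not converted
    because it is a relative depth, not a pixel value.
    """
    return int(round(x * width)), int(round(y * height))


if __name__ == "__main__":
    # Example: an index fingertip at the center of a 640x480 frame.
    print(INDEX_FINGER_TIP, to_pixels(0.5, 0.5, 640, 480))
```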

Installation

The code is written in Python and uses the Google MediaPipe and OpenCV libraries.

The MediaPipe Python setup guide describes the installation:

https://developers.google.com/mediapipe/solutions/setup_python

Download and install Python (version 3.8 to 3.11 is required):
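To confirm that the installed interpreter falls in the 3.8 to 3.11 range stated above, a small check like the following can be run with `py check_python.py` (the file name and the function `python_version_supported` are hypothetical). The range is taken from this README; it is a sketch, not an official MediaPipe compatibility check.

```python
import sys

# Supported range as stated in this README: Python 3.8 through 3.11.
MIN_VERSION = (3, 8)
MAX_VERSION = (3, 11)


def python_version_supported(version_info=sys.version_info):
    """Return True if the (major, minor) version lies within MIN_VERSION..MAX_VERSION."""
    major_minor = tuple(version_info[:2])
    return MIN_VERSION <= major_minor <= MAX_VERSION


if __name__ == "__main__":
    current = ".".join(str(part) for part in sys.version_info[:3])
    if python_version_supported():
        print(f"Python {current} is in the supported range.")
    else:
        print(f"Python {current} is outside 3.8 to 3.11; install a supported version.")
```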

https://www.python.org/downloads/windows/

Install pip

https://www.activestate.com/resources/quick-reads/how-to-install-pip-on-windows/

On the Windows command line, download and run the pip installer:

curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py

python get-pip.py

or

py get-pip.py

Install MediaPipe

https://developers.google.com/mediapipe/solutions/setup_python

python -m pip install mediapipe

or

py -m pip install mediapipe
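After installing, one way to confirm that both libraries can be imported without opening a camera is to look them up with `importlib.util.find_spec`. In this sketch, `missing_modules` is a hypothetical helper name. `cv2` is the import name for OpenCV; if it is reported missing, installing the `opencv-python` package with pip is one option.

```python
import importlib.util

# Import names to check: "mediapipe" from the pip command above and
# "cv2", the import name of OpenCV.
REQUIRED_MODULES = ["mediapipe", "cv2"]


def missing_modules(names=REQUIRED_MODULES):
    """Return the module names that cannot be found by this Python interpreter."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("mediapipe and cv2 are importable.")
```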

Running

With the required libraries installed, you can run the script by double-clicking it in Windows, or from the command line with:

py hands_2.py
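The source of hands_2.py is not reproduced here. The following is only a sketch of how such a script could work, assuming the legacy `mp.solutions.hands` API described in the hands.md documentation. It is not the author's implementation. It reads frames with OpenCV, converts them from BGR to RGB for MediaPipe, draws the detected landmarks, and appends index fingertip and thumb tip coordinates to a text file. It also writes the annotated frames to an mp4 file using the `mp4v` codec. The names `DELIMITER`, `format_line`, and `main`, the one-hand limit, and the line layout (frame number followed by six coordinates) are all hypothetical choices.

```python
# Hypothetical sketch of a hands_2.py-style script; not the author's source code.
DELIMITER = ","  # hypothetical delimiter for the text output
OUTPUT_TEXT = "hands_output.txt"
OUTPUT_VIDEO = "hands_video_output.mp4"


def format_line(frame_index, index_tip, thumb_tip, delimiter=DELIMITER):
    """Join a frame number and two (x, y, z) tuples into one delimited text line."""
    values = [str(frame_index)] + [f"{v:.6f}" for v in (*index_tip, *thumb_tip)]
    return delimiter.join(values)


def main(source=0):
    """Run hand detection on a camera index or video path (hypothetical entry point)."""
    # Imported here so format_line can be used without MediaPipe/OpenCV installed.
    import cv2
    import mediapipe as mp

    mp_hands = mp.solutions.hands
    mp_drawing = mp.solutions.drawing_utils

    cap = cv2.VideoCapture(source)
    if not cap.isOpened():
        raise RuntimeError(f"Could not open video source {source!r}")

    fps = cap.get(cv2.CAP_PROP_FPS) or 30.0  # cameras may report 0 FPS
    width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    writer = cv2.VideoWriter(OUTPUT_VIDEO, cv2.VideoWriter_fourcc(*"mp4v"),
                             fps, (width, height))

    frame_index = 0
    with mp_hands.Hands(max_num_hands=1, min_detection_confidence=0.5) as hands, \
            open(OUTPUT_TEXT, "w") as text_out:
        while True:
            ok, frame = cap.read()
            if not ok:
                break  # end of the video file, or the camera read failed
            # MediaPipe expects RGB images; OpenCV delivers BGR.
            results = hands.process(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
            if results.multi_hand_landmarks:
                landmarks = results.multi_hand_landmarks[0]
                mp_drawing.draw_landmarks(frame, landmarks, mp_hands.HAND_CONNECTIONS)
                index = landmarks.landmark[mp_hands.HandLandmark.INDEX_FINGER_TIP]
                thumb = landmarks.landmark[mp_hands.HandLandmark.THUMB_TIP]
                text_out.write(format_line(frame_index,
                                           (index.x, index.y, index.z),
                                           (thumb.x, thumb.y, thumb.z)) + "\n")
            writer.write(frame)  # annotated frame goes into the output video
            cv2.imshow("MediaPipe Hands", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break  # press q to stop early
            frame_index += 1

    cap.release()
    writer.release()
    cv2.destroyAllWindows()
```

Calling `main(0)` would use the default camera, and `main("SIMPLE_VIDEO.mp4")` would process a video file. Importing cv2 and mediapipe inside `main` is a design choice that keeps the formatting helper usable on its own.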

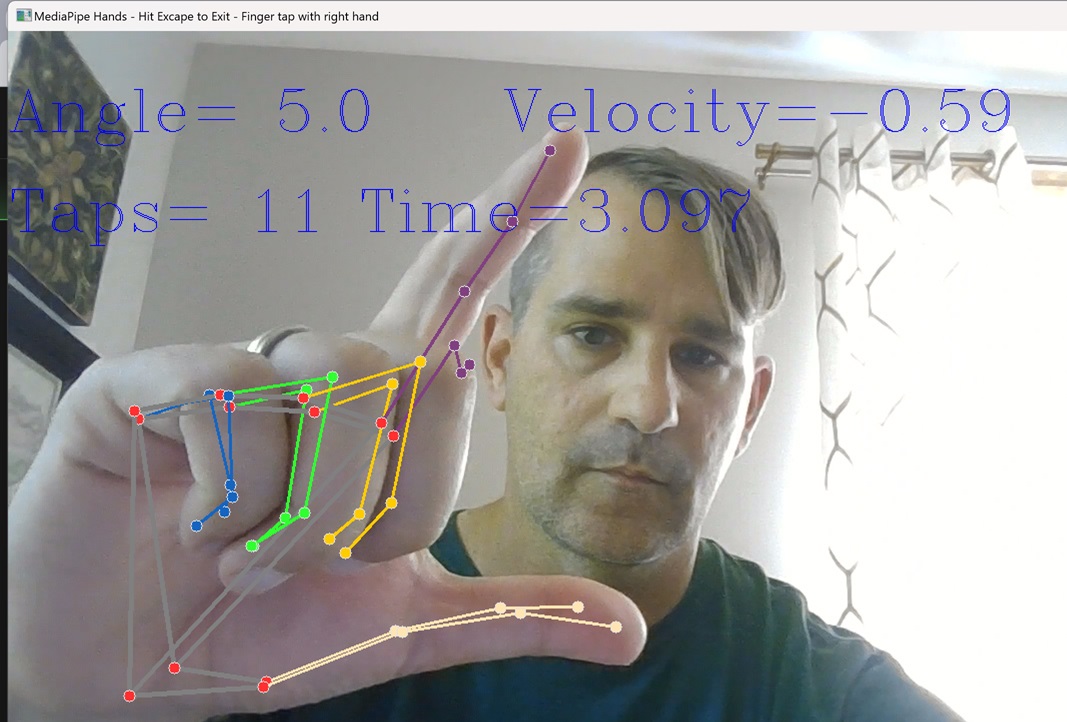

Output

The X, Y, Z coordinates of the index fingertip and the thumb tip are written to the text file hands_output.txt.

Change the delimiter variable to make the output file easier to parse.

The video output of the detection is written to the media file hands_video_output.mp4.
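The exact layout of hands_output.txt depends on the author's script. Assuming, hypothetically, that each line holds delimiter-separated numbers whose last six values are the index fingertip x, y, z followed by the thumb tip x, y, z, the file could be read back with a sketch like this one. Any leading fields, such as a frame number, are ignored. The names `parse_line` and `read_output` are illustrative, and `DELIMITER` must be set to match whatever delimiter the file was written with.

```python
# Hypothetical parser: assumes the last six values on each line are
# index tip x, y, z followed by thumb tip x, y, z.
DELIMITER = ","  # set to the delimiter used when hands_output.txt was written


def parse_line(line, delimiter=DELIMITER):
    """Return ((ix, iy, iz), (tx, ty, tz)) parsed from one output line."""
    fields = [f.strip() for f in line.split(delimiter) if f.strip()]
    if len(fields) < 6:
        raise ValueError(f"expected at least 6 values, got {len(fields)}: {line!r}")
    values = [float(f) for f in fields[-6:]]
    return tuple(values[:3]), tuple(values[3:])


def read_output(path="hands_output.txt", delimiter=DELIMITER):
    """Yield parsed (index_tip, thumb_tip) pairs for every non-empty line in the file."""
    with open(path) as f:
        for line in f:
            if line.strip():
                yield parse_line(line, delimiter)
```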

Input from camera or video file

In the Python code, a commented-out line reads from SIMPLE_VIDEO.mp4 instead of the camera. Comment or uncomment the lines below to switch between reading from the camera and reading from a video file:

cap = cv2.VideoCapture(0)  # read from the default camera (index 0)

# cap = cv2.VideoCapture('SIMPLE_VIDEO.mp4')  # read from a video file instead
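As an alternative to editing the code each time, the source could be chosen from the command line. The sketch below uses two hypothetical helpers, `source_from_argv` and `open_capture`, which are not part of the original script. It relies only on the fact that `cv2.VideoCapture` accepts either an integer camera index or a file path.

```python
def source_from_argv(argv):
    """Pick a cv2.VideoCapture source from command-line arguments (hypothetical helper).

    No argument -> camera index 0.
    A string of digits such as "1" -> that camera index as an int.
    Anything else (e.g. "SIMPLE_VIDEO.mp4") -> treated as a video file path.
    """
    if len(argv) < 2:
        return 0
    arg = argv[1]
    return int(arg) if arg.isdigit() else arg


def open_capture(source):
    """Open the camera or video file and raise an error if it cannot be opened."""
    import cv2  # imported lazily so source_from_argv works without OpenCV

    cap = cv2.VideoCapture(source)
    if not cap.isOpened():
        raise RuntimeError(f"Could not open video source {source!r}")
    return cap
```

In a script, `cap = open_capture(source_from_argv(sys.argv))` (with `import sys`) would replace the two hard-coded lines. With that change, `py hands_2.py` would use the camera and `py hands_2.py SIMPLE_VIDEO.mp4` would read the video file.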

More information on MediaPipe Hands: https://github.com/google-ai-edge/mediapipe/blob/master/docs/solutions/hands.md

Contact me at chadhewitt@gmail.com for the source code or with questions.